Chenghao Qian

GitHub | LinkedIn | Google Scholar | Gallery

Hi!👋 I am currently pursuing a Ph.D. at Virtuocity. Prior to this, I completed my master’s degree at the University of Sydney and gained years of experience working in robotics and autonomous driving at Parallel Domain, XPENG and UBTech. My overarching goal is to enable robots to operate reliably in any location, in any weather, at any time, or even, one day, to jump on the moon.🚀

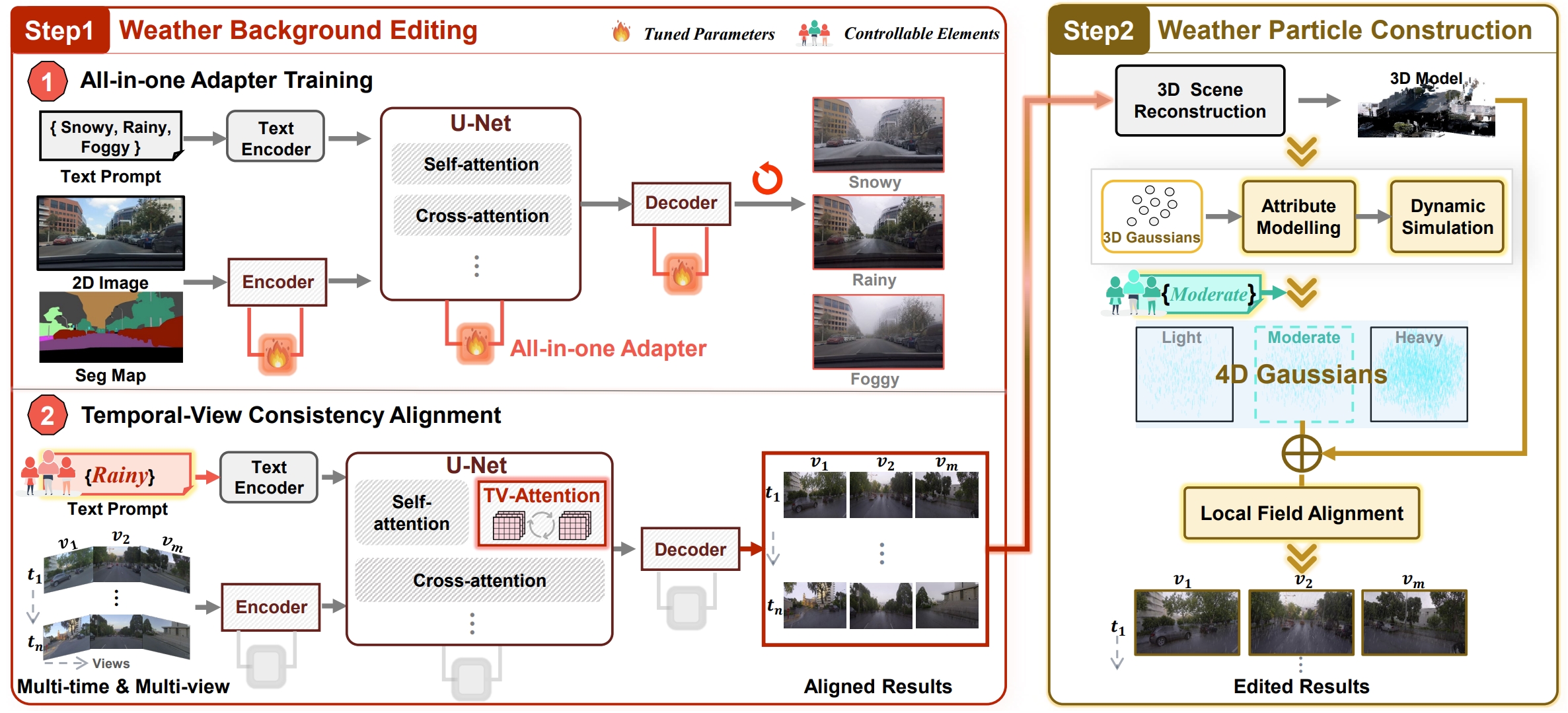

My current research tackles the core challenges of autonomous driving in adverse weather. To address them, I focus on:

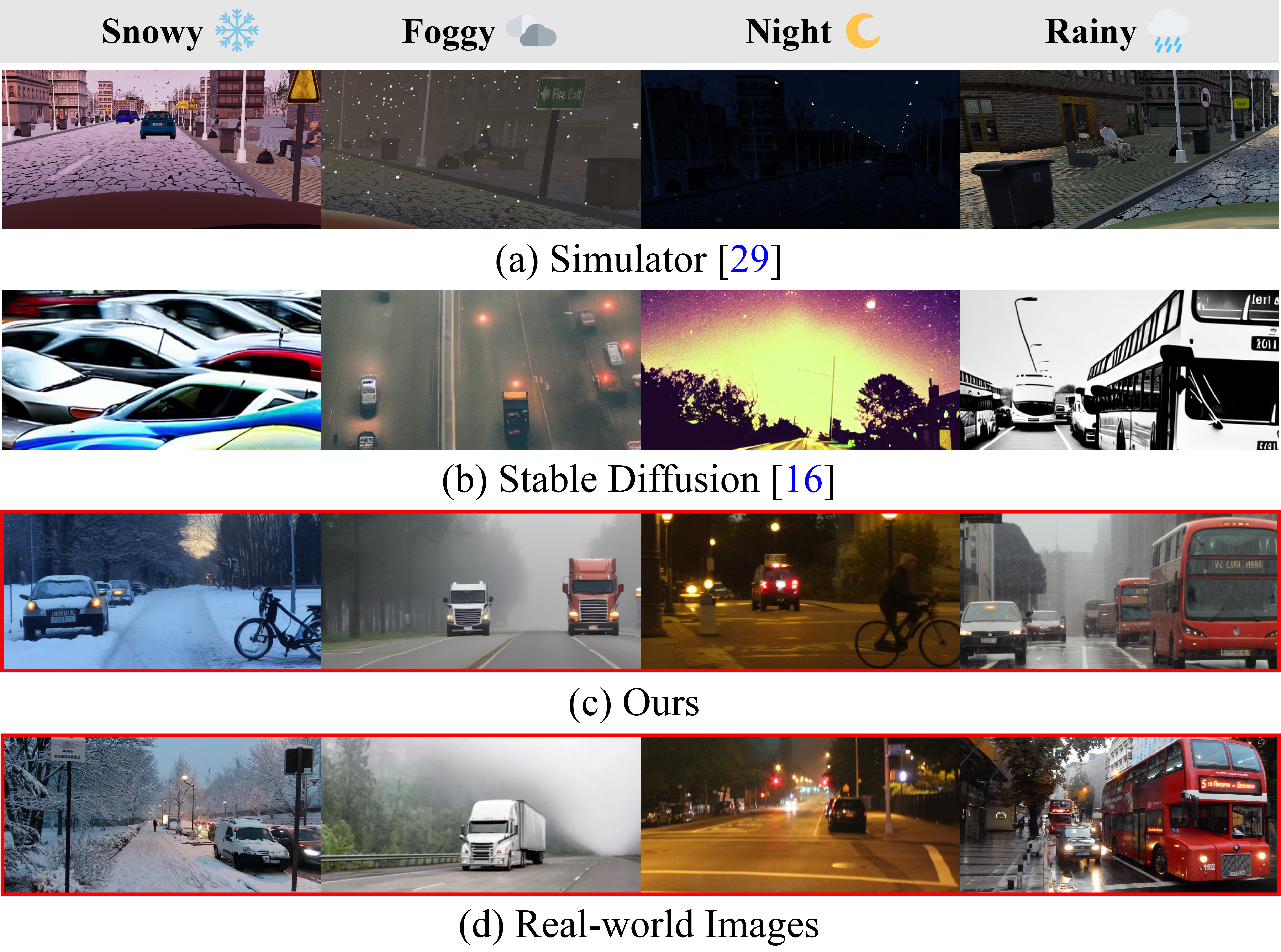

- Generative Modelling: Using generative frameworks to synthesize long-tail, safety-critical driving scenes for dataset enrichment, and to mitigate visual degradation for improved visual clarity.

- Scene Simulation: Developing high-fidelity simulations that replicate challenging scenarios to support foundation model training and validation.

If you have any questions about my research or would like to collaborate, please feel free to email me at tscq@leeds.ac.uk. I’d be glad to connect and chat! Beyond research, I’m into photography, basketball, and skateboarding, each offering a different lens on how I see the world.


News

| Date | Update |
|---|---|
| Feb 05, 2026 | I’m co-organizing the CVPR 2026 LoViF Workshop. Please join us! |
| Nov 08, 2025 | One paper accepted to AAAI 2026! |
| Oct 22, 2025 | Presented our paper at IROS 2025! |
| Sep 15, 2025 | One paper accepted to the ICCV Workshop on Generating Digital Twins from Images and Videos. |
| Jul 06, 2025 | Attended the International Computer Vision Summer School (ICVSS). An inspiring and enjoyable experience! |
| Mar 27, 2025 | Invited by the Institute for Safe Autonomy, University of York, to give a talk on "Weather Challenges in Autonomous Driving Perception Systems." |
| Mar 21, 2025 | Invited by Auto-Driving Heart to give a livestream talk. |
| Mar 19, 2025 | One paper accepted to IEEE RA-L. |
| Jan 27, 2025 | One paper accepted to IEEE ICRA 2025. |
| Dec 05, 2024 | AllWeatherNet received the 🌟Overall Best Student Paper Award (1 out of 2106) at ICPR 2024. |

Publications

2026

- AAAI

2025

- ICCV-W

- ICRA

3D Scene Reconstruction in Adverse Weather Conditions via Gaussian Splatting. IEEE International Conference on Robotics and Automation, 2025

- RA-L

LLM-assisted procedural weather generation for domain-generalized semantic segmentation. IEEE Robotics and Automation Letters, 2025


2024

- ICPR

🌟 Best Paper Award (1/2106): Unified Image Enhancement for Autonomous Driving Under Adverse Weather and Low-Light Conditions. In International Conference on Pattern Recognition, 2024


Services

Reviewer

Conference/Workshop:

- IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)

- ACM SIGGRAPH Asia (SIGGRAPH Asia)

- AAAI Conference on Artificial Intelligence (AAAI)

- IEEE International Conference on Robotics and Automation (ICRA)

- International Conference on Pattern Recognition (ICPR)

- Workshop on Embodied World Models for Decision Making (NeurIPS Workshop)

- Workshop on Generating Digital Twins from Images and Videos (ICCV Workshop)

Journal:

- International Journal of Computer Vision (IJCV)

- IEEE Transactions on Visualization and Computer Graphics (TVCG)

Tutor

COMP3631 Intelligent Systems and Robotics, University of Leeds

COMP2811 User Interface, University of Leeds